Automated Remediation and Augmented Intelligence

Detect downed services, attempt to fix them before involving a human, share the attempted remediation steps with human operators, and recommend what operators should try next.

Our Innovation and AI initiative was conceived to transform service delivery and improve the customer experience. Following our bold-but-calculated philosophy, we established a dedicated innovation group whose mission is to use new and emerging technologies for transformational change, with time to delivery as a key driver. The innovation group evaluates, designs, and implements leading technologies following the design principle of composable architecture. This has allowed us to pivot to superior technologies in an agile fashion, including IBM Watson, Gravity CX, Twilio Flex, IBM Datacap, Amazon Alexa, Google Assistant, Google Vision API, and New Relic.

These new technologies led to the development of Cleo, a virtual assistant powered by IBM Watson that supports an omnichannel, natural language experience through web chat, text, phone, email, social media, and voice technologies including Amazon Alexa and Google Assistant (until Google's sunset of custom voice integrations in 2022).

Leveraging our AI capabilities further, we developed several other AI personas and solutions. These include a virtual assistant for supporting employees named YODA (Your Online Digital Assistant), an agent for help desk ticket parsing and routing, another for system reliability engineering, and an agent that extracts features from scanned documents.

There was a significant opportunity to transform our customer and employee experiences through the effective development and delivery of AI-enabled services (a digital workforce) that provide an experience on par with the private sector for our customers and improve operational efficiency. Specifically, we have developed and delivered AI services with IBM Watson powering an intelligent virtual assistant (IVA) named Cleo (omnichannel through text, voice, web, Alexa/Google, email, and Facebook), an IVA named YODA to assist employees, an AI SRE agent named Alfred to actively monitor and act on system events, and a quorum of AIs for intelligent capture of data from court filings.

The Clerk of the Superior Court in Maricopa County deployed Cleo, the virtual agent behind the website chat box. Cleo uses IBM Watson technology to engage with citizens across many channels. In addition to our website chat, you can reach her through Amazon Alexa, Google Assistant (until Google's sunset of custom voice integrations in 2022), and SMS text messaging!

Get fast, accurate responses to your pressing concerns, whether you're looking for directions, checking office wait times, or asking about our services.

IBM Watson provides natural language understanding (NLU) in the form of intent and feature extraction, as well as conversational replies. Watson connects to our IBM Cloud Functions, which give our agents capabilities beyond simple chat.
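
IBM Cloud Functions is built on Apache OpenWhisk, where a Python action is a `main` function that takes a dictionary of parameters and returns a JSON-serializable dictionary. A minimal sketch of the kind of action Watson could call, assuming a hypothetical `location` parameter and a hard-coded wait-time table standing in for the real queue management integration (the location names and minutes are made up):

```python
# Hypothetical data: stands in for a live lookup against the queue management system.
WAIT_TIMES_MINUTES = {
    "downtown": 25,
    "northwest": 10,
}


def main(params: dict) -> dict:
    """OpenWhisk-style action: receives a dict of parameters, returns a JSON-serializable dict."""
    location = str(params.get("location", "")).strip().lower()
    if location not in WAIT_TIMES_MINUTES:
        # Signal the assistant to ask a clarifying question instead of guessing.
        return {"found": False, "location": location}
    minutes = WAIT_TIMES_MINUTES[location]
    return {
        "found": True,
        "location": location,
        "wait_minutes": minutes,
        "reply": f"The current wait at our {location.title()} location is about {minutes} minutes.",
    }


if __name__ == "__main__":
    print(main({"location": "Northwest"}))
```

Returning structured fields alongside the reply text lets the conversation flow branch on `found` rather than parsing prose.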

IBM Watson Voice Assistant provides NLU for spoken voice rather than typed, character-based messages. It uses speech to text (STT) to convert spoken language into character-based messages for our agents to process, and text to speech (TTS) to speak replies back to the customer.

All customer service channels are supported by the same AI agent, so a customer receives the same response regardless of how they connect with us. The agent is trained each day on data from unhandled conversations to improve its future responses. Translation services are used only on incoming messages to Cleo (things said to her), so that we can support as many customers as possible. No translation services are used for what Cleo says to our customers: all Spanish content is maintained by native speakers to avoid accidental misunderstandings in answers to court-related questions.
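
A minimal sketch of that one-way translation policy, assuming hypothetical `translate_to_english` and `classify_intent` callables that stand in for the translation service and Watson. The curated reply text is placeholder content, not the Clerk's actual answers:

```python
from __future__ import annotations

from typing import Callable

# Hypothetical curated content: each answer is written by staff in both languages
# (Spanish by native speakers) and is never machine translated.
CURATED_REPLIES: dict[str, dict[str, str]] = {
    "directions": {
        "en": "You can find directions to each of our locations on our website.",
        "es": "Puede encontrar indicaciones para llegar a cada una de nuestras ubicaciones en nuestro sitio web.",
    },
    "fallback": {
        "en": "I'm sorry, I didn't understand. Could you rephrase your question?",
        "es": "Lo siento, no entendí. ¿Podría reformular su pregunta?",
    },
}


def answer(
    text: str,
    lang: str,
    translate_to_english: Callable[[str, str], str],
    classify_intent: Callable[[str], str],
) -> str:
    """Translate only the inbound message; pick the outbound reply from curated content."""
    # Inbound: machine translation lets one English-trained agent understand many languages.
    english_text = text if lang == "en" else translate_to_english(text, lang)
    intent = classify_intent(english_text)
    replies = CURATED_REPLIES.get(intent, CURATED_REPLIES["fallback"])
    # Outbound: look up the human-written reply; fall back to English if no curated version exists.
    return replies.get(lang, replies["en"])
```

Because every channel calls the same `answer` function, the same question yields the same curated reply whether it arrives by chat, text, or voice.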

The Clerk of the Superior Court in Maricopa County deployed YODA, which uses IBM Watson technology to assist employees. Employees can locate useful links, obtain information on policies and procedures, and generate requests for assistance from our Employee Support Center.

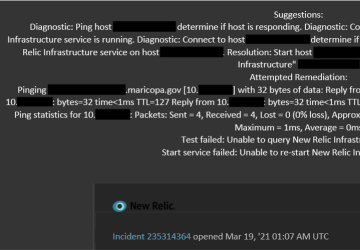

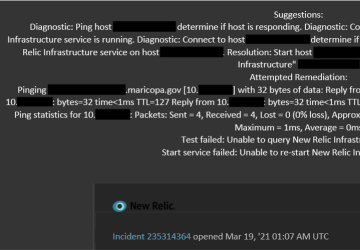

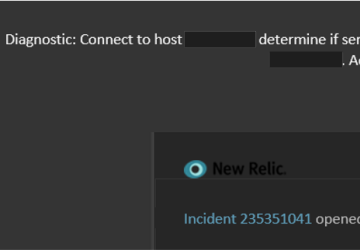

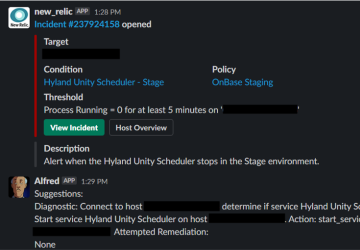

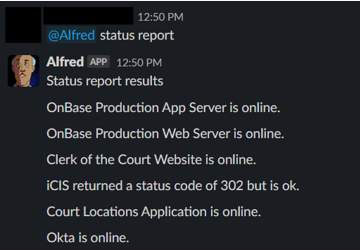

We also deployed an artificial intelligence system reliability engineer named Alfred. Alfred interprets alerts from New Relic AI and performs remediation actions accordingly, eliminating the need for human intervention when those actions succeed. When they do not, Alfred alerts humans to take action and makes augmented intelligence recommendations for them to follow.
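
Alfred's internals aren't described in detail. A minimal sketch of the loop described above (try remediation, verify, escalate with context), assuming hypothetical playbooks keyed by alert condition, each with ordered steps, a health check, and fallback recommendations for humans:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Playbook:
    steps: list[tuple[str, Callable[[], bool]]]  # (description, action) pairs, tried in order
    is_healthy: Callable[[], bool]               # health check run after each successful step
    recommendations: list[str]                   # what humans should try if every step fails


@dataclass
class Outcome:
    resolved: bool
    attempted: list[str] = field(default_factory=list)
    recommendations: list[str] = field(default_factory=list)


def remediate(condition: str, playbooks: dict[str, Playbook]) -> Outcome:
    playbook = playbooks.get(condition)
    if playbook is None:
        # No known fix: escalate straight to humans.
        return Outcome(resolved=False, recommendations=[f"No playbook for '{condition}'; investigate manually."])
    attempted: list[str] = []
    for description, action in playbook.steps:
        succeeded = action()
        attempted.append(f"{description}: {'ok' if succeeded else 'failed'}")
        if succeeded and playbook.is_healthy():
            return Outcome(resolved=True, attempted=attempted)
    # Every automated step was tried; hand off with what was done and what to try next.
    return Outcome(resolved=False, attempted=attempted, recommendations=list(playbook.recommendations))
```

The escalation `Outcome` carries what the paragraph says humans receive: the steps already attempted and recommendations for what to try next, ready to post to a ChatOps channel.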

To improve the employee experience and operational efficiency, we have also deployed Alfred as an artificial intelligence support center (help desk) agent. The support center agent reviews new support requests and determines the category and team assignment based on their content, so we no longer have to rely on the requester to select the correct category. Detecting these incoming requests also lets us trigger automated remediation, which decreases our time to resolution.
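
Alfred's actual classifier is Watson-powered and not detailed here. As a simplified, hypothetical stand-in, this sketch scores a request against keyword sets per category and, when nothing matches, falls back to a manual triage queue rather than guessing. The categories, teams, and keywords are made up for illustration:

```python
from __future__ import annotations

import re

# Hypothetical routing table: category -> (owning team, indicative keywords).
CATEGORIES: dict[str, tuple[str, set[str]]] = {
    "password_reset": ("Identity Team", {"password", "locked", "login", "reset"}),
    "printer": ("Desktop Support", {"printer", "print", "toner", "jam"}),
    "network": ("Network Team", {"vpn", "wifi", "network", "internet"}),
}
TRIAGE = ("uncategorized", "Support Center Triage")


def route_ticket(text: str, min_hits: int = 1) -> tuple[str, str]:
    """Return (category, team) for a support request, or the triage queue if nothing matches."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    best_category, best_hits = None, 0
    for category, (_, keywords) in CATEGORIES.items():
        hits = len(words & keywords)
        if hits > best_hits:  # strict '>' keeps the first category on ties
            best_category, best_hits = category, hits
    if best_category is None or best_hits < min_hits:
        return TRIAGE
    return best_category, CATEGORIES[best_category][0]
```

The category returned here is also the natural trigger point for automated remediation, such as starting a password-reset workflow.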

New Relic AI ingests operational data from our application infrastructure, detects anomalies, and correlates related alerts to reduce noise and alert fatigue before notifying us.
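
New Relic's own correlation logic is more sophisticated and is not described here. As a simplified illustration of why correlation reduces noise, this sketch groups alerts on the same entity that arrive within a time window (300 seconds is an arbitrary, hypothetical default) into one incident:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    entity: str       # e.g. a host or service name
    condition: str    # e.g. "high CPU"
    timestamp: float  # seconds since epoch


def correlate(alerts: list[Alert], window_seconds: float = 300) -> list[list[Alert]]:
    """Group alerts on the same entity arriving within window_seconds of that group's latest alert."""
    incidents: list[list[Alert]] = []
    open_by_entity: dict[str, list[Alert]] = {}
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = open_by_entity.get(alert.entity)
        if group is not None and alert.timestamp - group[-1].timestamp <= window_seconds:
            group.append(alert)  # same ongoing problem: no new notification
        else:
            group = [alert]      # new incident for this entity
            open_by_entity[alert.entity] = group
            incidents.append(group)
    return incidents
```

Only one notification per incident, rather than per alert, is what cuts alert fatigue.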

Our New Relic and IBM Watson powered system reliability engineer (SRE) is affectionately named Alfred. We combine New Relic AI for alert correlation with our own custom-built, Watson-powered AI SRE technologies to react to alerts as they occur.

When Alfred is unable to resolve an issue himself, he can offer augmented intelligence suggestions through ChatOps for humans to execute. Think of this as a tooltip for IT incidents.

Receive real-time recommendations in chat from our AI when alerts occur.

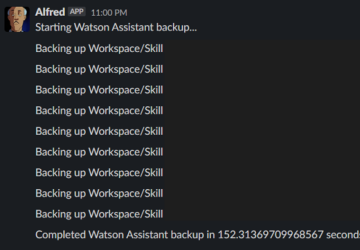

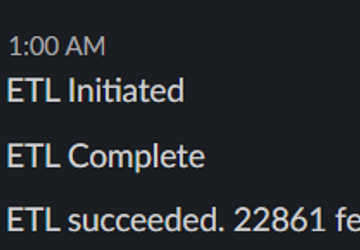

Receive team wide messages when processes or events occur.

Get a damage report when you need it, from any device, at any time, to triage technology issues.
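
The report's contents aren't specified. A minimal sketch, assuming open alerts arrive as hypothetical `(service, severity, condition)` tuples with severity `"critical"` or `"warning"`, that lists every monitored service worst-first so the damage is at the top of a small screen (service names are made up):

```python
from __future__ import annotations

# Lower rank = worse; services with no open alerts are "ok".
RANK = {"critical": 0, "warning": 1, "ok": 2}


def damage_report(services: list[str], open_alerts: list[tuple[str, str, str]]) -> list[str]:
    """One line per service, worst status first, with the conditions behind each status."""
    status = {s: "ok" for s in services}
    conditions: dict[str, list[str]] = {s: [] for s in services}
    for service, severity, condition in open_alerts:
        status.setdefault(service, "ok")
        conditions.setdefault(service, [])
        if RANK[severity] < RANK[status[service]]:
            status[service] = severity  # keep the worst severity seen
        conditions[service].append(condition)
    ordered = sorted(status, key=lambda s: (RANK[status[s]], s))
    return [
        f"{status[s].upper()}: {s}" + (f" ({', '.join(conditions[s])})" if conditions[s] else "")
        for s in ordered
    ]


print(damage_report(
    ["efiling", "website", "payments"],
    [("website", "warning", "slow page loads"), ("efiling", "critical", "service down"),
     ("website", "critical", "5xx errors")],
))
# ['CRITICAL: efiling (service down)', 'CRITICAL: website (slow page loads, 5xx errors)', 'OK: payments']
```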

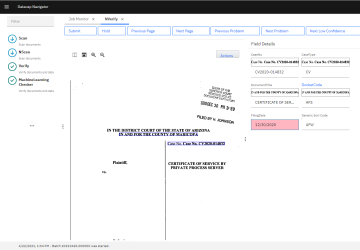

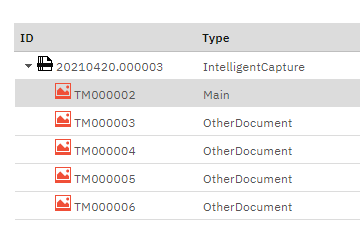

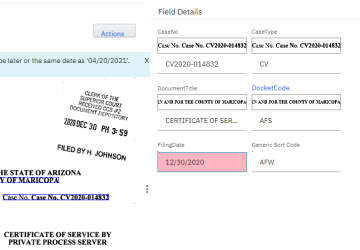

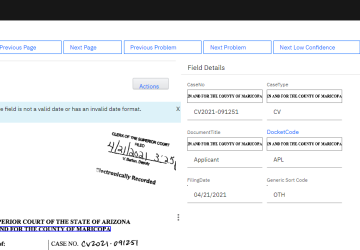

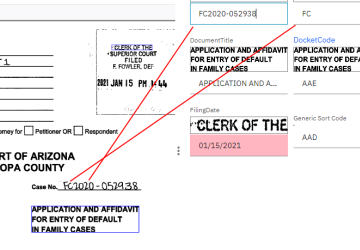

Intelligent Capture provides an immediate and significant opportunity to create operational efficiencies across the Judicial Branch and improve the customer experience by capturing data directly from scanned images. By reimagining the entire concept and existing operations for imaging paper filings, we created the opportunity to use data, not documents, to automate the review, acceptance, and routing of filings. This automation supports our virtual workforce initiative, allowing employees to process filings from home, mitigating ongoing concerns about COVID-19, and strengthening our position as an employer of choice given many employees' desire to work from home permanently.

Our computer vision service, called "The Great Eye," first uses local edge AI, in the form of OpenCV computer vision, to attempt to detect anomalies that would indicate non-machine markings. Next, it uses the Google Vision API to perform intelligent character recognition (ICR), also called handwriting recognition (HWR or HCR), on the contents. Finally, it draws on the knowledge already in Watson to extract feature data from the body of text. This use of modular AI to compensate for the shortcomings of any single AI is innovative: any component can be swapped out for a superior technology at any time.
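
The swap-ability claim is easiest to see as three narrow interfaces. A minimal sketch using Python's structural `Protocol` types; the interface and method names are hypothetical, and real implementations would wrap OpenCV, Google Vision, and Watson respectively:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


class MarkDetector(Protocol):
    """Local check, e.g. OpenCV, for markings that don't look machine-printed."""
    def has_handwriting(self, image: bytes) -> bool: ...


class TextReader(Protocol):
    """Handwriting-capable text recognition, e.g. a cloud ICR service."""
    def read(self, image: bytes) -> str: ...


class FeatureExtractor(Protocol):
    """Pulls named features (case number, party, etc.) out of recognized text."""
    def extract(self, text: str) -> dict[str, str]: ...


@dataclass
class CapturePipeline:
    detector: MarkDetector
    reader: TextReader
    extractor: FeatureExtractor

    def process(self, image: bytes) -> dict[str, object]:
        handwritten = self.detector.has_handwriting(image)
        text = self.reader.read(image)
        return {"handwritten": handwritten, "text": text, "features": self.extractor.extract(text)}
```

Replacing, say, the text reader with a different vendor only requires another object with a `read(image) -> str` method; `CapturePipeline` itself does not change.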

A key innovation is the quorum created by pairing our computer vision service with IBM Datacap to agree on the contents of a document. The two compare their confidence levels to determine whether a human should review the document. If one technology extracts a given feature more reliably, trust can be weighted toward that AI, making it the tie breaker for that feature.
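
A minimal sketch of such a two-engine quorum for a single field, assuming each engine reports a value and a 0 to 1 confidence. The per-field weights and the 0.8 review threshold are hypothetical parameters, not documented values:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    value: str
    confidence: float  # 0.0 to 1.0, as reported by the engine


def resolve_field(a: Reading, b: Reading, weight_a: float = 1.0, weight_b: float = 1.0,
                  threshold: float = 0.8) -> tuple[str, bool]:
    """Return (value, needs_human_review) for one field read by two engines."""
    if a.value == b.value:
        # Agreement: accept unless both engines are unsure.
        return a.value, max(a.confidence, b.confidence) < threshold
    # Disagreement: the engine with the higher weighted confidence breaks the tie.
    score_a, score_b = a.confidence * weight_a, b.confidence * weight_b
    winner = a if score_a >= score_b else b
    # Still route to a human if even the winner is not confident enough.
    return winner.value, max(score_a, score_b) < threshold


def document_needs_review(fields: dict[str, tuple[Reading, Reading]],
                          weights: dict[str, tuple[float, float]] | None = None) -> bool:
    """A document goes to a human if any field does; weights can favor one engine per field."""
    weights = weights or {}
    return any(resolve_field(a, b, *weights.get(name, (1.0, 1.0)))[1]
               for name, (a, b) in fields.items())
```

Weighting per field, rather than per engine, matches the idea that one AI may be more trustworthy for handwriting while the other is better on printed case numbers.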

Exceptions and audits are handled by humans who are able to correct or redefine extracted features.

AI determines which page is the first page in a series of legal documents.
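
How pages are scored is not described. Assuming a hypothetical classifier that gives each page a probability of being a first page, turning those scores into document boundaries (often called page stream segmentation) can be as simple as:

```python
from __future__ import annotations


def split_documents(first_page_scores: list[float], threshold: float = 0.5) -> list[list[int]]:
    """Split a scanned batch into documents from per-page first-page probabilities.

    Returns lists of 0-based page indexes; the first page of the batch always starts a document.
    """
    documents: list[list[int]] = []
    for index, score in enumerate(first_page_scores):
        if not documents or score >= threshold:
            documents.append([index])    # start a new document
        else:
            documents[-1].append(index)  # continuation page
    return documents
```

For example, `split_documents([0.9, 0.1, 0.2, 0.8, 0.3])` returns `[[0, 1, 2], [3, 4]]`: two filings in one scanned batch.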

AI is able to read skewed text and extract meaning from it.

AI is able to read skewed handwritten text and extract meaning from it.

AI is able to read skewed handwritten text, extract meaning from it, and route documents based on content.

Web chat: accessible from any modern browser at any time of day, an AI persona customer service agent with human support during business hours. Bilingual!

SMS: text an AI persona customer service agent at any time of day, with human support during business hours. Bilingual!

Email: email an AI persona customer service agent at any time of day, with human support during business hours.

Amazon Alexa: "Ask the Clerk's Office" on Alexa-enabled devices to reach an AI persona customer service agent at any time of day.

Google Assistant: "Ask the Clerk's Office" on Google Assistant enabled devices to reach an AI persona customer service agent at any time of day.

Telephone: traditional telephone support from an AI persona customer service agent at any time of day, with human support during business hours.

Facebook Messenger: reach Cleo through social media at any time of day.

Imagine a fully interactive experience that uses all of our emerging services to guide users through our capabilities.

PROTOTYPED!

Imagine a full mobile experience, built on all of our emerging services, with mobile filing capability.

IMAGINED!

| Channel | Description | Users/Sessions |
|---|---|---|
| Web Chat | Text-based interactions that originate from our website on any device. | 10,489 |
| SMS | Text messages that originate from a mobile long or short code. | 79 |
| Voice First | Voice interactions that originate from an Amazon Alexa or Google Assistant enabled device. | 27 |
| Voice Telephony | Voice interactions that originate from a voice telephone line. | 2 |

Customer support agents include data integrations that enhance their capabilities.

Customers are able to assess wait times at our various physical locations through integrations with our queue management system.

Customers can obtain turn-by-turn directions to any of our locations. AI agents can suggest locations that are closer to the customer and share their lobby wait times. The same mapping technology that gives our AI agents spatial awareness is also available to customers through our mapping application.
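
The mapping technology isn't named. A minimal sketch of the "closer location plus wait time" suggestion, assuming straight-line (haversine) distance as a stand-in for real driving distance and hypothetical `Location` records whose `wait_minutes` come from the queue management system:

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    name: str
    lat: float
    lon: float
    wait_minutes: int  # current lobby wait, e.g. from the queue management system


def haversine_miles(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle (straight-line) distance in miles between two lat/lon points."""
    earth_radius_miles = 3958.8
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_miles * math.asin(math.sqrt(a))


def suggest_locations(lat: float, lon: float, locations: list[Location], limit: int = 2) -> list[str]:
    """Nearest locations first, each with its distance and current wait."""
    with_distance = sorted(
        ((haversine_miles(lat, lon, loc.lat, loc.lon), loc) for loc in locations),
        key=lambda pair: pair[0],
    )
    return [
        f"{loc.name}: {miles:.1f} miles away, about {loc.wait_minutes} minute wait"
        for miles, loc in with_distance[:limit]
    ]
```

Ranking purely by distance is a design simplification; a variant could score each location by travel time plus lobby wait.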

Employee support agents include data integrations that enhance their capabilities.

New Relic facilitates observability in the form of instrumentation, metrics, and alerts on applications and infrastructure.

Safe Software's FME facilitates the execution of complex data extractions that relieve humans from data manipulation tasks.

Nintex RPA facilitates the execution of complex automations that relieve humans from doing mundane or lengthy tasks.

Power BI facilitates the human evaluation of key performance metrics associated with our AI personas for continuous improvement.

How are customers and employees able to provide feedback and how is that feedback recognized?

Chat conversations are followed up with a customer satisfaction survey on select channels. Those surveys feed into key performance indicators.
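
The survey scale and KPI definitions aren't given. One common convention, assumed here, reports customer satisfaction (CSAT) as the percentage of responses rating 4 or 5 on a 1 to 5 scale, broken down by channel:

```python
from __future__ import annotations


def csat_percent(ratings: list[int], satisfied_min: int = 4) -> float | None:
    """Percentage of 1-5 ratings at or above satisfied_min; None if there are no responses."""
    if not ratings:
        return None  # avoid dividing by zero when a channel has no surveys yet
    satisfied = sum(1 for rating in ratings if rating >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


def csat_by_channel(responses: list[tuple[str, int]]) -> dict[str, float | None]:
    """Group (channel, rating) survey responses and compute CSAT per channel."""
    ratings_by_channel: dict[str, list[int]] = {}
    for channel, rating in responses:
        ratings_by_channel.setdefault(channel, []).append(rating)
    return {channel: csat_percent(ratings) for channel, ratings in ratings_by_channel.items()}
```

For example, ratings of 5, 4, 3, 5, and 1 give a CSAT of 60.0, since three of the five responses are 4 or higher.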

Utterances (single messages sent to an AI agent) are evaluated for negative sentiment. Those flagged as frustrated are reviewed by human agents to determine what enhancements our virtual AI agents need.
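
How messages are judged frustrated isn't described; a production system would more likely use a sentiment or tone model. As a deliberately simple stand-in, this sketch flags utterances using a hypothetical keyword list and a couple of patterns, producing the queue human agents review:

```python
from __future__ import annotations

import re

# Hypothetical cues; tune against real conversation logs before relying on them.
FRUSTRATION_WORDS = {"useless", "ridiculous", "frustrated", "frustrating", "annoying", "human"}  # "human": asking to leave the bot
FRUSTRATION_PATTERNS = [
    re.compile(r"\bnot (helpful|working)\b"),  # explicit complaints
    re.compile(r"!{2,}"),                      # repeated exclamation marks
]


def is_frustrated(utterance: str) -> bool:
    text = utterance.lower()
    words = set(re.findall(r"[a-z']+", text))
    return bool(words & FRUSTRATION_WORDS) or any(p.search(text) for p in FRUSTRATION_PATTERNS)


def review_queue(utterances: list[str]) -> list[str]:
    """Utterances for a human agent to review when deciding what the virtual agent should learn."""
    return [u for u in utterances if is_frustrated(u)]
```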

Direct recommendations, such as requests for review or suggested content, are evaluated by human agents to determine what enhancements our virtual AI agents need.

When employees edit data features captured by our Intelligent Capture solution, those edits retrain our AI so that the next scanned document matching the same criteria is routed correctly.
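
The retraining mechanism isn't described. The sketch below does not retrain a model; it shows the feedback loop in its simplest form, assuming a hypothetical document "signature" (document type plus filing party) and made-up queue names: an employee's correction is remembered and consulted before the default rule the next time a matching document arrives.

```python
from __future__ import annotations


class RoutingMemory:
    """Default routing rules plus remembered human corrections, which take priority."""

    def __init__(self, default_routes: dict[str, str], fallback_queue: str = "Manual Review") -> None:
        self.default_routes = default_routes  # document type -> work queue
        self.fallback_queue = fallback_queue
        self.corrections: dict[tuple[str, str], str] = {}

    @staticmethod
    def signature(features: dict[str, str]) -> tuple[str, str]:
        # Hypothetical matching criteria: documents "match" if type and filing party agree.
        return features.get("document_type", ""), features.get("filing_party", "")

    def route(self, features: dict[str, str]) -> str:
        learned = self.corrections.get(self.signature(features))
        if learned is not None:
            return learned
        return self.default_routes.get(features.get("document_type", ""), self.fallback_queue)

    def record_correction(self, features: dict[str, str], correct_queue: str) -> None:
        """Call when an employee fixes a routing decision during review."""
        self.corrections[self.signature(features)] = correct_queue
```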